So symmetry in physics, according to this particle physics chapter I'm looking at, is a powerful computational tool because it gives you the most compact possible way to compute and organize physical laws and the complexity that comes out of them. Uh, did somebody say optimization? Quantum Gravity Research's work is another great example of this, with their quantum spin networks: geometric models with interesting and frankly spooky combinatorial properties that give real-world solutions for physics.

Let's connect that to neural nets, starting from the physics side.

Symmetries are properties of a system that stay the same while other things about it change, and they still have real influence on how the system behaves. The classic example of a property with only a few allowed values is the Stern-Gerlach experiment, where atoms fired through an inhomogeneous magnetic field landed in only one of two spots, demonstrating that atomic spin is quantized: something observed that can be constrained to a list of possible states, which relationships in the real world can then be drawn from precisely. Examples: quantum mechanics gave us modern computers, networks, and particle physics.

To connect symmetry to natural processes we need to look to thermodynamics. In nature, systems tend toward maximum entropy. Entropy measures the number of possible states the system can be in. A ball of hot gas has very high entropy, while an ice cube has very low entropy. One changes rapidly because all the atoms are moving past each other rapidly, while the other barely changes because it is a solid crystal structure. Lower entropy systems take more energy to change. Think of how you can push your hand through a gas easily but can't push it through an ice cube because of its rigid structure. Also think of it this way: a system that is less differentiated has fewer possibilities. If you think of energy as coming in a set amount, like it does in nature, then possibilities represent fluctuations in that overall energy field, which were probably massive near the big bang, as suggested by the whole quantum wave idea in cosmology.

Maximum entropy means the system or particle could be in the highest possible number of states, or has the highest number of micro-states available to it to express itself. Think of it as asking: what are all the processes internal to the system ("hidden dimensions") that govern what happens between point A and point B in time, and what could they be doing in that span or in the in-between moments? In quantum terms, a subsystem looks like it is at maximum entropy, where it could be in any of its possible states, when it is maximally entangled with the rest of the system.
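To make "number of micro-states" concrete, here is a minimal sketch using coin flips instead of particles. The toy system and the function names (`multiplicity`, `boltzmann_entropy`) are my own assumptions for illustration. The macro-state is "how many heads," the micro-states are the exact sequences that produce it, and the entropy is given in units of Boltzmann's constant.

```python
import math

def multiplicity(n_coins: int, n_heads: int) -> int:
    """Number of micro-states (exact coin sequences) with this many heads."""
    return math.comb(n_coins, n_heads)

def boltzmann_entropy(n_coins: int, n_heads: int) -> float:
    """Dimensionless Boltzmann entropy S / k_B = ln(W) for a toy coin system."""
    return math.log(multiplicity(n_coins, n_heads))

N = 10  # hypothetical system size, chosen only to keep the table short
for heads in range(N + 1):
    # The half-heads macro-state has the most micro-states, so the highest entropy.
    print(heads, multiplicity(N, heads), round(boltzmann_entropy(N, heads), 3))
```

The all-heads and all-tails rows have exactly one micro-state each (entropy zero), and the count peaks in the middle, which is the "system drifts toward the macro-state with the most ways of happening" picture.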

These possible states can be organized into phase spaces, and you can mark out all the possible states and measured states like points in a 3D map. You can calculate an abstract volume within the phase space, meaning the number of micro-states consistent with what you observe, and the logarithm of that volume (times Boltzmann's constant) is the Boltzmann entropy. On the information side, the negative logarithm of an event's probability is the quantity of information in that event. The Shannon entropy is the negative sum of the probability of each event multiplied by the logarithm of that probability, or the average information in the given set of events.
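Here is a small sketch of those two information quantities, using the usual sign convention (information of an event is minus the log of its probability, and Shannon entropy is the probability-weighted average of that). The function names and the example coins are assumptions of mine, and I'm using base-2 logs so the answers come out in bits.

```python
import math

def surprisal(p: float) -> float:
    """Information content of a single event with probability p, in bits."""
    return -math.log2(p)

def shannon_entropy(probs) -> float:
    """Average surprisal over a distribution, in bits.

    Zero-probability events are skipped, since they contribute nothing.
    """
    return sum(p * surprisal(p) for p in probs if p > 0)

fair_coin = [0.5, 0.5]      # hypothetical example distributions
biased_coin = [0.9, 0.1]

print(shannon_entropy(fair_coin))    # maximum uncertainty for two outcomes
print(shannon_entropy(biased_coin))  # lower: the outcome is easier to guess
```

The fair coin gives the largest value a two-outcome system can have, which is the maximum entropy idea again, just counted in bits instead of micro-states.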

One thing that's interesting and quite peculiar is that there is an information-energy equivalence. This means that the information contained within an event has its own energy. This was illustrated by the Szilard engine thought experiment, and more recently demonstrated in the awesome Information-Heat Engine experiment. Why is this useful?
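For a sense of scale, the ideal Szilard engine can turn one bit of information into at most k_B T ln 2 of work, which is the same number as the Landauer limit for erasing a bit. A quick sketch of that arithmetic follows. The function name and the 300 K room temperature are my own assumptions, and this is the textbook bound, not a result taken from the experiment mentioned above.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)

def energy_per_bit(temperature_kelvin: float) -> float:
    """Ideal work obtainable from (or cost of erasing) one bit: k_B * T * ln 2."""
    return K_B * temperature_kelvin * math.log(2)

room_temp = 300.0  # assumed room temperature in kelvin
print(energy_per_bit(room_temp))  # on the order of 1e-21 joules per bit
```

It's a tiny amount of energy per bit, but it's not zero, and that's the whole point.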

http://colah.github.io/posts/2014-03-NN-Manifolds-Topology

Remember when I said nature tends toward maximum entropy? Well, how do we bring this abstract mumbo jumbo into the real world? When you're generalizing probabilities to solve real world problems, like with neural networks, we now understand a very particular relationship between information, the model, and nature, because information itself has energy and entropy. You can map probability trajectories (particle movements over time, internal and external) this way, and map the entire topology of that system or subsystem in terms of possibilities calculated from more-or-less complete (i.e. testable) datasets, like the ones used for image recognition. For growing datasets in adaptive systems, when the measured entropy crosses the maximum entropy of your model after noise reduction, you know your model is incomplete and needs to extrapolate. At least that's my best understanding so far.
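Here's a toy sketch of that last check, under some loud assumptions: the model is just a fixed set of `n_states` categories (so its maximum entropy is log2 of that count), the data is a list of labels, and I skip noise reduction entirely. All the names and the example streams are hypothetical.

```python
import math
from collections import Counter

def empirical_entropy(observations) -> float:
    """Shannon entropy (bits) of the observed label frequencies."""
    counts = Counter(observations)
    total = len(observations)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

def model_max_entropy(n_states: int) -> float:
    """Largest entropy a model with n_states equally likely states can express."""
    return math.log2(n_states)

def model_looks_incomplete(observations, n_states: int) -> bool:
    """True when the data is more varied than the model can represent."""
    return empirical_entropy(observations) > model_max_entropy(n_states)

old_stream = ["cat", "dog"] * 50                   # fits a two-state model
new_stream = ["cat", "dog", "bird", "fish"] * 25   # two unseen categories appear

print(model_looks_incomplete(old_stream, n_states=2))
print(model_looks_incomplete(new_stream, n_states=2))
```

A real adaptive system would need some tolerance for noise and sampling error before declaring the model incomplete, so treat the strict comparison here as the simplest possible stand-in.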


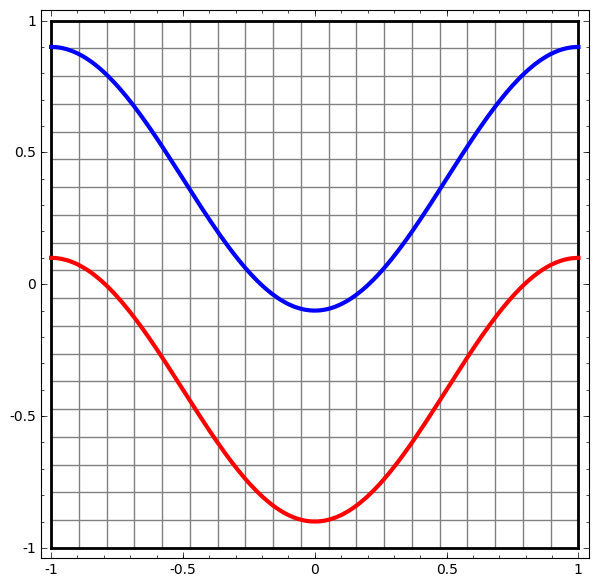

E.g. extrapolate in a "hidden layer" to separate curves linearly to help solve for various relationships.
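A tiny sketch of what that caption means, shrunk down to one dimension: the points near zero are one class and the points far from zero are the other, so no single threshold on x separates them. Two hidden ReLU units bend the space so that one straight cut does the job. The weights are hand-picked by me for illustration, not trained, and every name here is hypothetical.

```python
def relu(x: float) -> float:
    return max(0.0, x)

def hidden_layer(x: float):
    """Two hand-picked hidden units: one fires far to the right, one far to the left."""
    return (relu(x - 1.5), relu(-x - 1.5))

def linear_readout(h) -> int:
    """A single linear threshold in hidden space: class 1 if h1 + h2 > 0.25."""
    return 1 if h[0] + h[1] > 0.25 else 0

inner = [-1.0, -0.5, 0.0, 0.5, 1.0]          # class 0, clustered near zero
outer = [-3.0, -2.5, -2.0, 2.0, 2.5, 3.0]    # class 1, on both sides of class 0

for x in inner + outer:
    # Inner points all collapse to (0, 0); outer points move away from it.
    print(x, hidden_layer(x), linear_readout(hidden_layer(x)))
```

In the raw input the outer class surrounds the inner one, but after the hidden layer the inner class sits at the origin and a single line separates everything.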

Symmetries don't change when other things change, and that's where they come into this picture. Special Relativity is based on this kind of idea (the laws of physics look the same in every inertial frame), and gauge symmetries extend it to transformations of the underlying fields that leave the observable physics unchanged. The common thread is that higher order phenomena tend to be a complexification of lower order phenomena. A 2015 paper identified symmetries, or "translational invariances," present in trained deep learning network data structures for feature extraction. Manifold Tangent Classifiers, built on "high-order contractive auto-encoders" (i.e. fancy dimensionality reducers), use the partial derivatives of the learned representation to build a "topological atlas of charts, each chart being characterized by the principal singular vectors of the Jacobian of a representation mapping." As I read it, this is faster and more accurate than the average Convolutional Neural Network, and competes with the current best models, which use a shit ton more layers to add combinatorial power. If that doesn't say enough, well, here's a little more.
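To show what a translational invariance looks like in a network, here is a minimal sketch of the textbook version: slide a filter over a signal, then take the global maximum. This is not the method from the 2015 paper or the Manifold Tangent Classifier. The wrap-around (circular) boundary, the filter, and all the names are assumptions I made to keep the example exact.

```python
def circular_correlate(signal, kernel):
    """Slide the kernel over the signal, wrapping around at the ends."""
    n = len(signal)
    return [
        sum(kernel[j] * signal[(i + j) % n] for j in range(len(kernel)))
        for i in range(n)
    ]

def shift(signal, k):
    """Circularly shift the signal k steps to the right."""
    k %= len(signal)
    return signal[-k:] + signal[:-k]

def pooled_feature(signal, kernel):
    """Global max pooling over the feature map: 'is the pattern anywhere?'"""
    return max(circular_correlate(signal, kernel))

signal = [0, 0, 1, 3, 1, 0, 0, 0]   # a hypothetical bump
kernel = [1, 2, 1]                  # a hypothetical bump detector

for k in range(len(signal)):
    # The feature map moves along with the input, but the pooled value stays put.
    print(k, pooled_feature(shift(signal, k), kernel))
```

The feature map itself shifts with the input (equivariance), and pooling throws away the position, which leaves a quantity that doesn't change when the input moves. That's a symmetry in exactly the sense above.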

MNIST Dataset to identify handwritten numbers.

Result of a clustering algorithm

Very recent work identified that control equations for manifolds were solvable on deep residual networks when observing them over time. This means they're following the rules of energy and information flow. To me, this means that a better neural network will be formulated precisely along these lines. Another very recent work identified a neural network decoder model based on multi-scale entanglement tensor models, and achieved better than state-of-the-art performance for compression with significantly fewer parameters. Yet another recent work created a Restricted Boltzmann Machine neural network that didn't need exponentially many nodes to represent more and more highly entangled many-body systems - instead, the number of nodes scaled linearly with the size of the system.

If you can figure out the optimal way to do something, like how the best neural networks are the fastest, most accurate, and most cost-effective, you have accounted for all the possible micro-states of that something in some way. Pure combinatorial mathematics is a great way to do that when natural laws are taken advantage of, as the Manifold Tangent Classifier and Restricted Boltzmann Machine show. Combined with, say, recurrent LSTM or Residual networks and/or the symplectic CapsNet models, or something better and still undiscovered for retaining memories efficiently, so that time-series and topology patterns in data get mapped more accurately, this could be a great generalization solution for an adaptive and growing neural net. I will find out. I'm learning TensorFlow and all sorts of other shit, and I think I finally have a good enough grasp.

The paranoia is real.

The Method of Loci is an Art of Memory technique that works by placing memories on spatial maps, where subjects can "walk" through a mental map, real or imagined, and very successfully recall information that way. The Art of Memory is another subject I will be looking to for inspiration, as I have with the whole of Hermetic thought. Proper mnemonic memory techniques have some fascinating beneficial effects on the brain, even for a naive learner. I imagine it can go the other way, too. A locus in mathematics is a set of points that share a property based on some relationship equation, usually forming a curve or surface in a space. In psychology, a "locus of control" is more about how much one feels in control of their own life.

I tend to believe that neural nets get a privileged position: they can test some of the broader metaphysical or otherwise human experience-based wisdom at work across these fields. If the relationships I've drawn here are any evidence, that wisdom seems to be aiding computer and data science, as well as neuroscience and health, in ways that are already amazing and perhaps still unimaginable to humans. That's what the obvious hype behind AI is all about. I'm also now thinking a new kind of evolution may kick off if a computer can figure this kind of stuff out for itself, and it turns out to be as useful a paradigm as it appears to be for natural learning and memory optimization. That also means it's our responsibility to make sure it's not some ZuccBot advertising overlord bullshit that kicks this whole thing off.
